A case study from English Touring Theatre, produced as part of the Quality Metrics National Test in the UK.

With very special thanks to English Touring Theatre for sharing their results and insight for this case study.

Does having a collaborative relationship with a host venue have an impact on dimension scores for touring companies?

As a touring company, English Touring Theatre (ETT) had a different experience from many of the other users of the Quality Metrics National Test. ETT used the metrics and the Culture Counts platform to evaluate a single work, The Herbal Bed, in different locations across the country. Evaluating one piece of work in several locations enabled the organisation to compare how it was received in each place. And it wasn't just the audience's experience; ETT also measured the experience of peer and self assessors in each location.

As staff were not able to travel to each venue, ETT decided that the most practical way to collect public responses was the 'Online' method, in which attendees are emailed after the performance and asked for feedback. For this to work, ETT had to rely on the cooperation of the host venues. In most cases, securing that cooperation, with ETT offering to share its results with the host venue, was not a problem. In one case, however, it proved a real challenge: the venue chose not to assist in collecting public responses. As a result, no public responses were gathered for that venue, which was naturally frustrating for ETT. Even so, ETT was still able to gather peer and self responses there, and these have contributed to its overall evaluation.

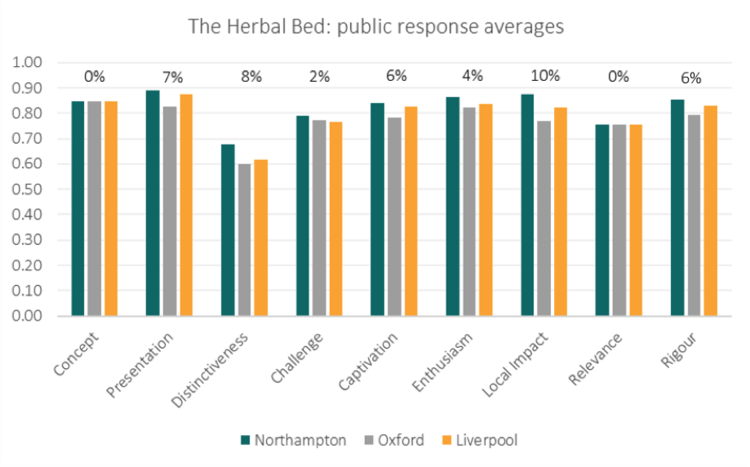

When the public responses are compared across the three locations, the overall shape of the graph stays the same. However, responses in Northampton were consistently higher than in Oxford and Liverpool, and the lowest scores were generally found in Oxford. Between Oxford and Northampton, the dimension with the largest difference in scores was Local Impact, with a 9% difference between the two locations. Although this may not seem large in isolation, it is more than double the average difference of 3.9%, which makes it notable. It is worth highlighting that The Herbal Bed was co-produced with the host venue in Northampton. This collaboration might have resonated with the audience in a particular way, giving them a stronger attachment to the work than audiences at other locations and venues felt. Other factors could of course be playing a part, and this is where looking at demographics and other cultural activity in each location could also be of interest.

The chart below compares scores across the locations. For public respondents, the percentage difference between the highest-scoring and lowest-scoring location is marked above the bars:

Although the peer and self assessor samples are smaller, their results are still interesting and highlight the value of including a variety of perspectives within each respondent group.

The self assessors were all members of ETT's creative team, yet they scored the production very differently from one another. Is this because each self assessor focuses on a different element of the production and therefore approaches the assessment differently? Or is it because their individual backgrounds in the cultural sector have led them to receive the production differently? The range of perspectives revealed in the self assessment highlights the value of using multiple assessors. Results such as these broaden the discussion around creative intention.

The peer assessments show a similar pattern to the public responses, with the Northampton production generally scoring highest. Peer responses may be influenced by how well each assessor knows the organisation's work and by their previous experience as a reviewer. The results largely mirror broader trends emerging from the Quality Metrics National Test: for example, there is a large difference between public and peer scores for the Distinctiveness dimension, and peer assessors tend to score lower than self assessors.

On reflection, the significance of the co-production between English Touring Theatre and the host venue in Northampton should not be underestimated: the scores suggest that all respondent groups experienced that production more positively.